Robot behaviors are often built as computational graphs, with data flowing from sensors to compute technologies, all the way down to actuators and back. To obtain additional performance, robotics compute platforms must map these graph-like structures efficiently to CPUs, but also to specialized hardware including FPGAs and GPUs.

First published at https://www.roboticsbusinessreview.com/opinion/building-robot-chips-modern-compute-architectures-in-robotics/

Traditional software development for robotics systems is largely a function of programming central processing units (CPUs). However, due to the inherent architectural constraints and limitations of CPUs, these robotics systems often exhibit processing inefficiencies (indeterminism), high levels of power consumption and security issues. Indeed, building robust robotics systems that rely solely on CPUs is a challenging endeavor.

Robotics Compute Platforms

As the demands for advanced capabilities in robotics systems have increased, several companies have released dedicated robotics and edge AI platforms that provide high-performance compute, secure connectivity, on-device machine learning and more. Examples include Xilinx’s Versal AI Edge, Qualcomm’s Robotics RB5 Platform and NVIDIA’s Jetson family of systems-on-a-chip (SoCs).

These robotics and AI platforms pack a variety of compute resources including CPUs, digital signal processors (DSPs), graphics processing units (GPUs), Field Programmable Gate Arrays (FPGAs) and application-specific integrated circuits (ASICs), among others. They allow roboticists to build flexible compute architectures for robots, but require one to use the right tool for each task to maximize its performance, a process that can be complex and confusing.

This article discusses the pros and cons of the various compute resources available to roboticists, and provides additional perspectives on them as modern compute architectures for robotics systems.

Scalar Processors (CPUs)

Scalar processing elements (e.g. CPUs) are very efficient at complex algorithms with diverse decision trees and a broad set of libraries. However, performance scaling is limited.

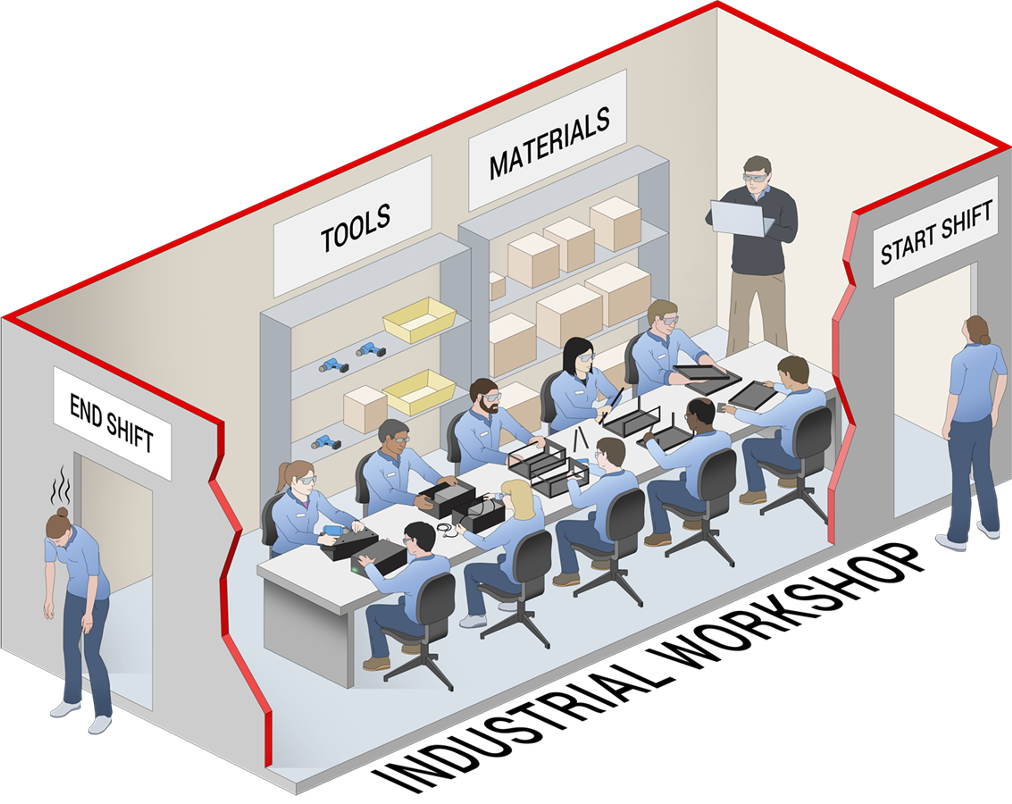

A CPU with multiple cores can be understood as a group of workshops in a factory, each employing very skilled workers. These workers each have access to general-purpose tools that let them build almost anything. Each worker crafts one item at a time, successively using different tools to turn raw material into finished goods. The workshops are mostly (disregarding the cache) independent, and the workers can all be doing different tasks without distractions or coordination problems.

Although CPUs are highly flexible, their underlying hardware is fixed. Most CPUs are still based on the von Neumann architecture (or more accurately, the stored-program computer), where data is brought to the processor from memory, operated on, and then written back out to memory. Fundamentally, each CPU core operates in a sequential fashion, one instruction at a time, and the architecture is centered around the arithmetic logic unit (ALU), which requires data to be moved in and out of it for every operation.
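To make the stored-program model concrete, here is a minimal sketch of a toy machine that executes one instruction at a time and counts every transfer between memory and the processor. The three-instruction set (`LOAD`, `ADD`, `STORE`) and the `run` function are hypothetical, invented for illustration, and do not correspond to any real CPU.

```python
# Toy stored-program machine. The instruction set is hypothetical and
# exists only to illustrate sequential execution and memory traffic.
def run(program, memory):
    """Execute instructions one at a time; return the number of memory transfers."""
    acc = 0          # single accumulator register feeding the ALU
    transfers = 0    # every move between memory and the processor
    pc = 0           # program counter: strictly sequential execution
    while pc < len(program):
        op, addr = program[pc]
        if op == "LOAD":       # memory -> register
            acc = memory[addr]
            transfers += 1
        elif op == "ADD":      # memory -> ALU, result stays in the register
            acc += memory[addr]
            transfers += 1
        elif op == "STORE":    # register -> memory
            memory[addr] = acc
            transfers += 1
        else:
            raise ValueError(f"unknown instruction: {op}")
        pc += 1
    return transfers


memory = {"a": 2, "b": 3, "c": 0}
program = [("LOAD", "a"), ("ADD", "b"), ("STORE", "c")]
transfers = run(program, memory)
print(memory["c"], transfers)
```

Even this single addition needs three memory transfers, which is the data-movement cost the paragraph above describes.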

In modern robot architectures, scalar processors play a central role. The use of CPUs for the coordination of information flows across sensing, actuation and cognition is fundamental for robotics systems. Moreover, the Robot Operating System (ROS), the widely adopted software framework for robot application development, is designed in a CPU-centric manner.

Vector Processors (DSPs, GPUs)

Compared to CPUs, vector processing elements (e.g. DSPs, GPUs) are more efficient at a narrower set of parallelizable compute functions. However, they experience latency and efficiency penalties because of their inflexible memory hierarchy.

Following the factory metaphor described earlier, a GPU also has workshops and workers, but it has considerably more of them, and the workers are much more specialized. These workers have access to only specific tools and can do fewer things, but they do them very efficiently.

GPU workers function best when they do the same few tasks repeatedly, and when all of them are doing the same thing at the same time. After all, with so many different workers, it is more efficient to give them all the same orders. As such, vector processors address one of the major drawbacks of CPUs in robotics: their limited ability to process large amounts of data in parallel.
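The "same orders for every worker" idea can be sketched as a kernel that is written once and applied to every element of a buffer. The `launch` helper below is a hypothetical stand-in for a GPU launch, and it runs the iterations sequentially. On a real vector processor they would run concurrently. The kernel and the sample values are assumptions chosen for illustration.

```python
def launch(kernel, n, *buffers):
    """Hypothetical stand-in for a GPU launch: run `kernel` once per index.

    Emulated sequentially here; a vector processor would execute these
    iterations in parallel, with every worker following the same code.
    """
    for thread_id in range(n):
        kernel(thread_id, *buffers)


def scale_and_offset(i, src, dst):
    """Every worker applies the same arithmetic to its own element."""
    dst[i] = 2.0 * src[i] + 1.0


ranges = [0.5, 1.0, 1.5, 2.0]   # e.g. a few sensor readings (made-up values)
out = [0.0] * len(ranges)
launch(scale_and_offset, len(ranges), ranges, out)
print(out)
```

Because the kernel contains no per-element branching, every worker follows an identical instruction stream, which is the case in which vector processors are most efficient.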

Programmable Logic (FPGAs)

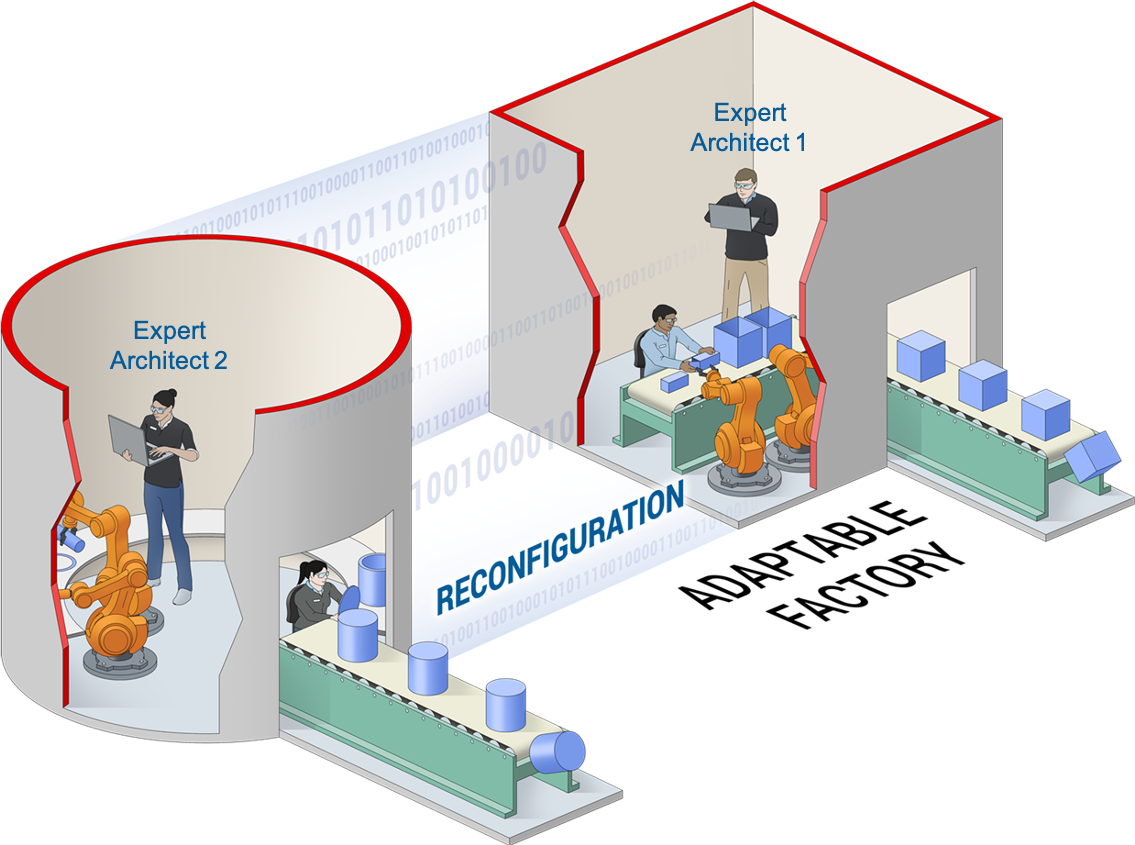

Programmable logic (e.g. FPGAs) can be precisely customized to a particular compute function, which makes it well suited for latency-critical, real-time applications. These advantages, however, come at the cost of programming complexity. Also, reconfiguration and reprogramming require longer compile times than for scalar and vector processors.

Using our factory metaphor, FPGAs are flexible and adaptable workshops, where architects can deploy assembly lines and conveyor belts customized for the particular task at hand. This adaptability means that the FPGA architects get to build assembly lines and workstations, and then customize them for the required task instead of using general-purpose tools and memory structures.

In robot architectures, FPGAs enable runtime-reconfigurable robot hardware that is defined through software. Software-defined hardware for robots excels at data flow computations, as statements are executed as soon as all operands are available. This makes FPGAs extremely useful for interfacing with sensors and actuators and for handling networking. Moreover, FPGAs can create custom hardware acceleration kernels with unmatched flexibility, making them an interesting alternative to vector processors for data processing tasks.
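The data flow rule, where a statement executes as soon as all of its operands are available, can be illustrated with a small software model. This is only a sketch under stated assumptions: `run_dataflow`, the node names and the numeric values are hypothetical, and real programmable logic would fire all ready nodes in the same clock cycle rather than in a loop.

```python
def run_dataflow(nodes, inputs):
    """Fire each node as soon as all of its operands are available.

    nodes:  name -> (function, [operand names])
    inputs: name -> initial value
    Returns the computed values and the order in which nodes fired.
    """
    values = dict(inputs)
    pending = dict(nodes)
    order = []
    while pending:
        ready = [name for name, (_, operands) in pending.items()
                 if all(op in values for op in operands)]
        if not ready:
            raise ValueError("unresolvable operands: " + ", ".join(sorted(pending)))
        for name in ready:   # in hardware, these would fire simultaneously
            func, operands = pending.pop(name)
            values[name] = func(*(values[op] for op in operands))
            order.append(name)
    return values, order


# Hypothetical control computation; names and numbers are illustrative only.
nodes = {
    "error": (lambda target, measured: target - measured, ["target", "measured"]),
    "command": (lambda error, gain: gain * error, ["error", "gain"]),
    "filtered": (lambda measured: 0.5 * measured, ["measured"]),
}
inputs = {"target": 10.0, "measured": 8.0, "gain": 0.5}
values, order = run_dataflow(nodes, inputs)
print(order, values["command"])
```

Note that `filtered` fires in the first pass alongside `error`, even though it is listed last: execution order follows operand availability, not program order.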

Application-Specific Integrated Circuits (ASICs)

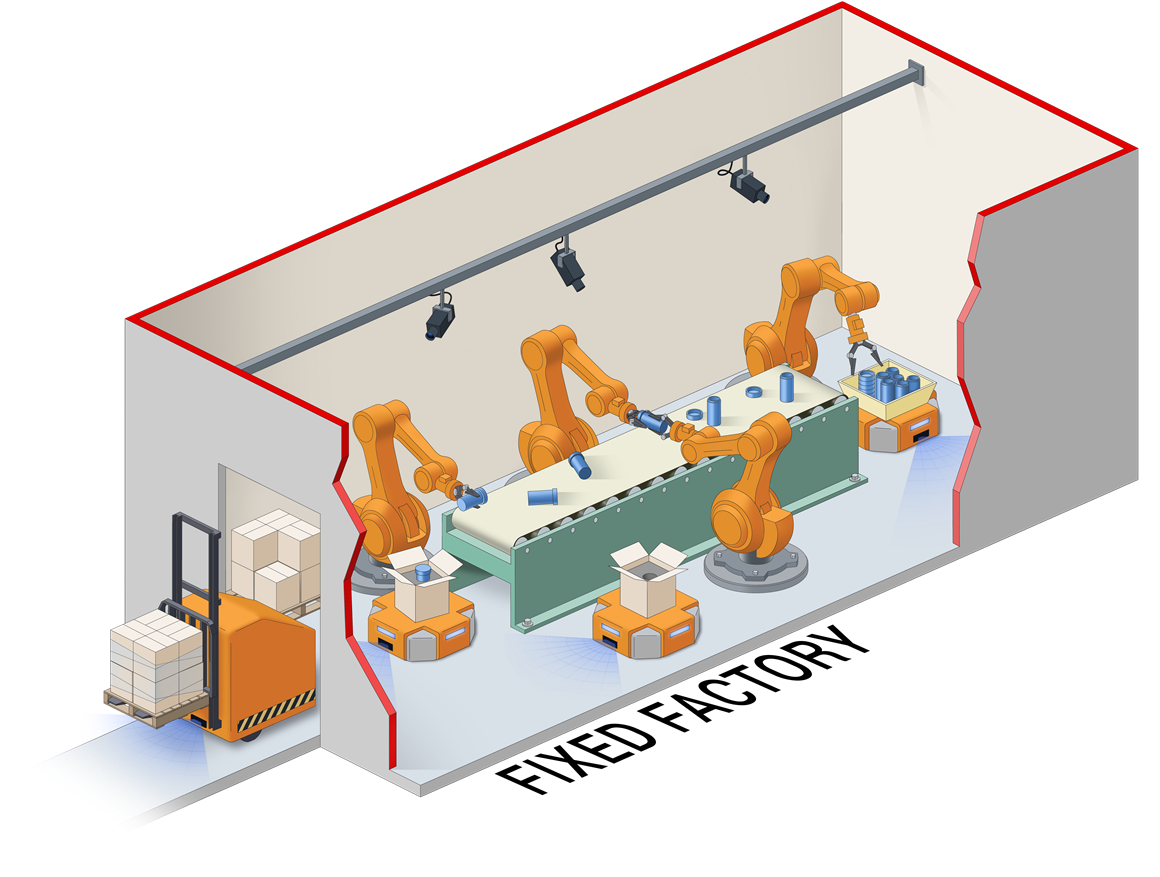

Continuing with our factory analogy, ASICs, like FPGAs, build assembly lines and workstations, but unlike FPGAs, ASICs are final and cannot be modified. In other words, in ASICs’ workshops the assembly lines and conveyor belts are fixed, allowing no changes in the automation processes. The ad hoc, fixed architectures of ASICs provide unmatched performance and power efficiency, as well as the best prices for high-volume mass production.

Unfortunately, ASICs take many years to develop, and do not allow for any changes. Meanwhile, robotics algorithms and architectures continue to evolve rapidly, and as such robot-specific ASIC-based accelerators can fall months or even years behind the state-of-the-art algorithms. While ASICs will be instrumental in some robotics systems going forward, the use of ASICs in robot architectures remains limited.

Network of Networks

Robots are inherently deterministic machines. They are networks of networks, with sensors capturing data and passing it to compute technologies, and then on to actuators and back again in a deterministic manner.

These networks can be understood as the nervous system of the robot. As in the human nervous system, passing information in real time across all networks is fundamental for the robot to behave coherently. The von Neumann-based architectures of scalar and vector processors excel at control flow, but struggle to guarantee determinism. This is where FPGAs and ASICs come into play as critical enabling technologies for robotics systems.

Robotics Compute Architectures

Consider robotics systems that use the Robot Operating System (ROS), which is becoming increasingly common for robotics development of all types. With ROS, robotics processes are designed as nodes in computational graphs. Robotics compute platforms must be able to map these graph-like structures to silicon efficiently.

ROS computational graphs should run across compute substrates in a seamless manner, and data must flow from programmable logic (FPGAs) to CPUs, from CPUs to vector processors, and all the way back. In other words, robotics chips should map ROS computational graphs not just to CPUs, but also to FPGAs, GPUs and other compute technologies to obtain additional performance.
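As a rough illustration of what mapping a computational graph across substrates involves, the sketch below describes a small pipeline as a dependency graph, assigns each node to a compute resource, and lists the edges where data must cross from one substrate to another. It does not use the ROS API. The node names, the `placement` table and the `crossings` helper are all hypothetical, and the only library call is `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later).

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each node maps to the set of nodes it depends on.
graph = {
    "camera_driver": set(),
    "image_rectify": {"camera_driver"},
    "object_detector": {"image_rectify"},
    "planner": {"object_detector"},
    "motor_controller": {"planner"},
}

# Assumed placement of each node on a compute substrate (illustrative only).
placement = {
    "camera_driver": "FPGA",
    "image_rectify": "FPGA",
    "object_detector": "GPU",
    "planner": "CPU",
    "motor_controller": "FPGA",
}


def crossings(graph, placement):
    """Return the edges whose endpoints sit on different substrates."""
    return [(src, dst)
            for dst, deps in graph.items()
            for src in sorted(deps)
            if placement[src] != placement[dst]]


order = list(TopologicalSorter(graph).static_order())
hops = crossings(graph, placement)
print(order)
for src, dst in hops:
    print(f"{src} ({placement[src]}) -> {dst} ({placement[dst]})")
```

Each entry in `hops` is a point where data has to move between compute technologies, so a good mapping weighs the speedup of a specialized substrate against the cost of these transfers.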

Optimal Compute Resource(s)

In contrast to the traditional CPU-centric robotics programming model, the availability of additional compute platforms provides engineers with a high level of architectural flexibility. Roboticists can exploit the properties of the various compute platforms – determinism, power consumption, throughput, etc. – by selecting, mixing and matching the right compute resource(s) depending on need.