Tesla's Optimus robot isn't anything new, but the team behind it believes that its "brain" is the key to conquering humanoids. This article takes a closer look at the publicly available information on its hardware and software computer architecture, and at the evolution behind it. Compared to Optimus' latest "Bot Brain", existing open source software and hardware alternatives present a much more appealing starting point for building humanoid robots. In particular, the Robot Operating System (ROS) could save Tesla hundreds of engineering-years in software development, and the Robotic Processing Unit hardware provides 7.5x the AI performance of Tesla's "Bot Brain". Tesla could do a lot of good by joining the robotics community's trends and contributing, instead of reinventing the wheel in software and hardware.

Over the last few days Tesla seems to have owned the robotics discussion. The main topic is Tesla's latest robot: Optimus, a humanoid prototype. While it's exciting to have colleagues from other domains notice our work in robotics, it's also rather frustrating to see how certain claims damage our field by setting false and unrealistic expectations. Others have already written about this [1] [2].

One of the relevant messages from Tesla is that their "robot brains" are somehow going to outperform the existing technology and help their robots interact better with the real world. In their talk, representatives of Tesla claimed the following:

[1] For Better or Worse, Tesla Bot Is Exactly What We Expected. Tesla fails to show anything uniquely impressive with its new humanoid robot prototype. https://spectrum.ieee.org/tesla-optimus-robot

[2] What Robotics Experts Think of Tesla’s Optimus Robot. Roboticists from industry and academia share their perspectives on Tesla’s new humanoid. https://spectrum.ieee.org/robotics-experts-tesla-bot-optimus

Tesla: You’ve all seen very impressive humanoid robot demonstrations, and that’s great, but what are they missing? They’re missing a brain—they don’t have the intelligence to navigate the world by themselves.

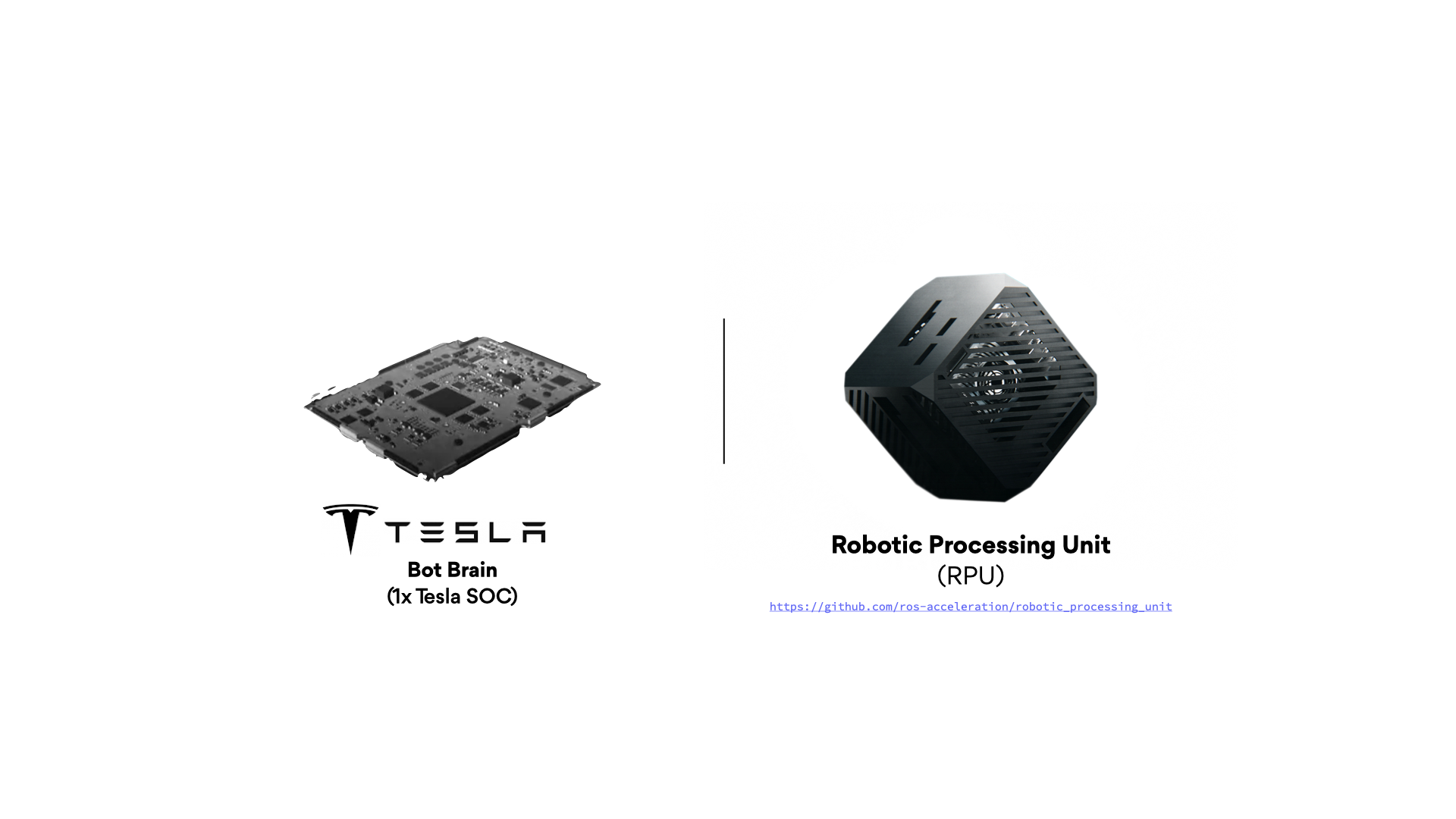

Since the claim is about robot brains, let's look at things from a hardware and software computer architecture perspective and see how Optimus compares to the Robotic Processing Unit, one of our sponsored open projects for building robot brains.

Optimus' robot brain from a hardware perspective

When considering a robot's computer architecture, one should account for the fact that robots are inherently deterministic machines. Meeting time deadlines in their computations (real-time) is the most important feature. Other characteristics also matter when designing robotic computations, including the time between the start and the completion of a task (latency), the total amount of work done in a given time (throughput or bandwidth), and whether a task takes exactly the same amount of time each time it runs (determinism).
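
To make these terms concrete, here is a minimal Python sketch that runs a periodic task and summarizes its latency, throughput, jitter (as a rough proxy for determinism) and missed deadlines. The function names, the metric names and the choice of "worst latency minus best latency" as the jitter measure are assumptions made for illustration. A general-purpose OS and interpreter give no real-time guarantees, so this only shows how the quantities relate, not how a real-time system would be validated.

```python
import statistics
import time


def summarize(latencies_s, elapsed_s, deadline_s):
    """Summarize timing samples for a task that ran len(latencies_s) times."""
    return {
        # Latency: time between the start and the completion of one run.
        "worst_latency_s": max(latencies_s),
        "mean_latency_s": statistics.fmean(latencies_s),
        # Throughput: completed runs per second of wall-clock time.
        "throughput_hz": len(latencies_s) / elapsed_s,
        # Jitter: spread between slowest and fastest run (0 would be fully deterministic).
        "jitter_s": max(latencies_s) - min(latencies_s),
        # Real-time: how many runs finished later than their deadline.
        "deadline_misses": sum(1 for latency in latencies_s if latency > deadline_s),
    }


def measure(task, period_s, deadline_s, iterations):
    """Release `task` once per period and collect its timing statistics."""
    latencies = []
    first_release = time.perf_counter()
    for i in range(iterations):
        start = time.perf_counter()
        task()
        latencies.append(time.perf_counter() - start)
        # Sleep until the next release time, if there is any time left.
        remaining = first_release + (i + 1) * period_s - time.perf_counter()
        if remaining > 0:
            time.sleep(remaining)
    elapsed = time.perf_counter() - first_release
    return summarize(latencies, elapsed, deadline_s)
```

Calling `measure(some_control_step, period_s=0.001, deadline_s=0.001, iterations=1000)` with a hypothetical `some_control_step` function would report how often a 1 kHz loop misses its deadline on the machine at hand.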

Optimus's brain in 2021: Tesla's FSD

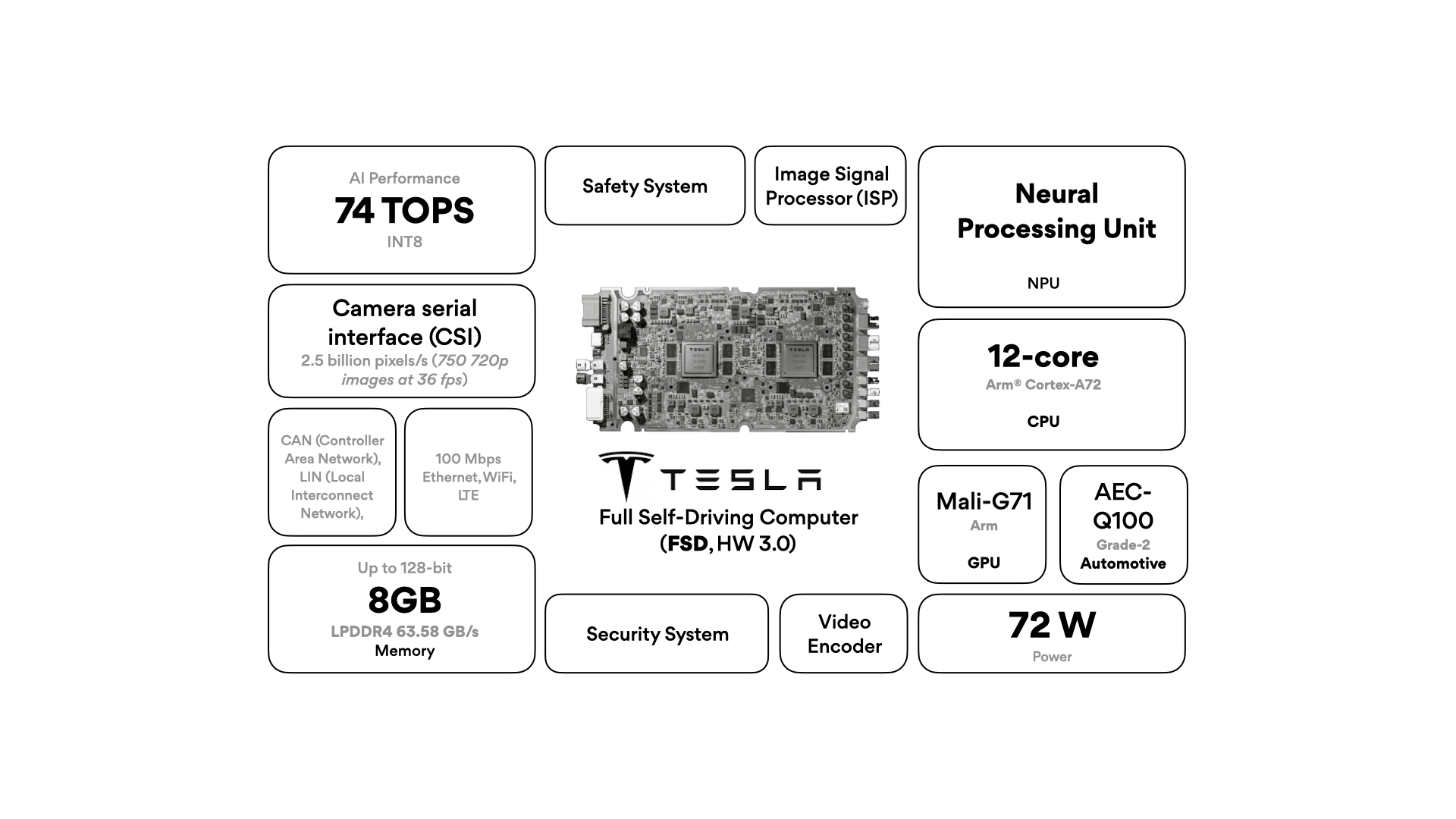

During their first presentation (Tesla AI Day 2021), Tesla claimed that Optimus would build on their Full Self-Driving Computer (FSD) hardware, presumably HW 3.0 [1], which introduced the new Tesla SOC [2]. This came as a surprise to many of us since, after all, building different types of robots often requires significantly different hardware and architectures.

[1] Tesla Autopilot, https://en.wikipedia.org/wiki/Tesla_Autopilot#Hardware_3

[2] Tesla SOC or FSD chip, https://en.wikichip.org/wiki/tesla_(car_company)/fsd_chip

Tesla's FSD (HW 3.0) doesn't seem suited to drive the sensors and actuators you can expect to find in a capable humanoid robot. The engineering team evidently acknowledged this and presented a new brain for Optimus, the so-called "Bot Brain".

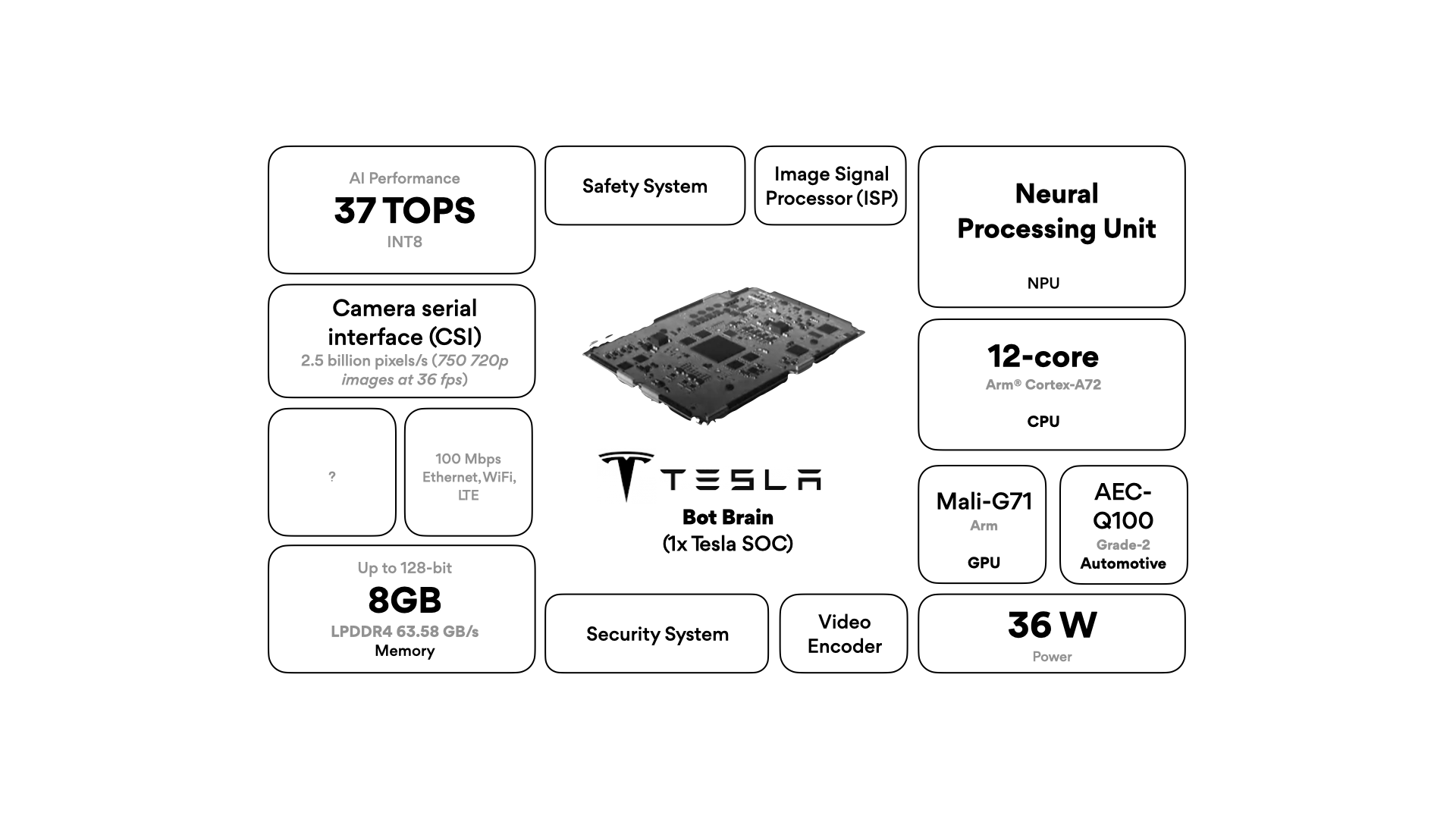

Optimus's brain in 2022: enter the "Bot Brain", 1x Tesla SOC

In the recent presentation (Tesla AI Day 2022), Tesla pivoted slightly from FSD to a new embedded device. Instead of claiming FSD hardware, Optimus' robot brain (the "Bot Brain") is now based on a single Tesla SOC (1x) and presumably exposes additional I/O to drive all the sensors and actuators.

There's not much public information available, so much of the depiction above is guesswork based on the Tesla SOC specs. An obvious observation is the reduction in compute capability and in power consumption that results from using one Tesla SOC instead of two.

Looking at the changes that the robot brain hardware underwent within the first year, it's highly likely that we'll see more of these in the near term. After all, Tesla still has much to learn about building humanoids, and they are rapidly realizing that machine learning might not be the silver bullet they've been promising for building robots (e.g. there's no machine learning used in their latest locomotion approach, but rather trajectory optimization using reference controllers).

Software remains the biggest challenge most of us see in robotics today.

Optimus' robot brain from a software perspective

Robot behaviors generally take the form of computational graphs, with data flowing between computation Nodes across physical networks (communication buses) while mapping to underlying sensors and actuators. The popular choice for building these computational graphs for robots these days is the Robot Operating System (ROS), a framework for robot application development. ROS enables you to build computational graphs and create robot behaviors by providing libraries, a communication infrastructure, drivers and tools to put it all together. Most companies building real robots today use ROS or similar event-driven software frameworks. ROS is thereby the common language in robotics, with several hundred companies and thousands of developers using it every day. ROS 2 was redesigned from the ground up to address some of the challenges in ROS, and it solves many of the problems in building reliable robotics systems.
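
As a rough illustration of what such a computational graph looks like, the following plain-Python sketch wires three callbacks together through named topics. It deliberately does not use the ROS API: the `Graph` class, the topic names, the smoothing filter and the 0.5 m stopping threshold are all hypothetical stand-ins chosen for this example, and a real ROS 2 graph would additionally distribute Nodes across processes and machines.

```python
from collections import defaultdict, deque


class Graph:
    """Tiny in-process stand-in for a publish/subscribe computational graph."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic name -> list of callbacks
        self._queue = deque()                  # pending (topic, message) pairs

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        self._queue.append((topic, message))

    def spin(self):
        """Deliver queued messages in order until the graph is idle."""
        while self._queue:
            topic, message = self._queue.popleft()
            for callback in self._subscribers[topic]:
                callback(message)


def build_demo(graph, commands):
    """Wire a sensor -> filter -> controller -> actuator pipeline (hypothetical)."""
    state = {"previous": None}

    def filter_node(distance_m):
        # Smooth raw range readings by averaging with the previous estimate.
        previous = state["previous"]
        smoothed = distance_m if previous is None else 0.5 * (previous + distance_m)
        state["previous"] = smoothed
        graph.publish("range/filtered", smoothed)

    def controller_node(distance_m):
        # Stop when an obstacle is closer than 0.5 m, otherwise move slowly.
        graph.publish("cmd/velocity", 0.0 if distance_m < 0.5 else 0.2)

    graph.subscribe("range/raw", filter_node)
    graph.subscribe("range/filtered", controller_node)
    graph.subscribe("cmd/velocity", commands.append)  # stands in for an actuator
```

To try it, create a `Graph`, call `build_demo(graph, commands)` with an empty list, publish a few readings on `"range/raw"` and call `graph.spin()`; the velocity commands accumulate in the list. The point of the design is that each Node only knows topic names, which is what lets frameworks like ROS swap implementations or move Nodes between compute units without touching the rest of the graph.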

ROS 2 is a modern and popular framework for robot application development that most silicon vendor solutions support. It offers a variety of modular packages, each implementing a different robotics feature, which also simplifies performance benchmarking in robotics.

Tesla doesn't seem to be using ROS. Instead, it appears to be taking software components developed for its self-driving vehicles and porting them to the humanoid robot domain. This suspicion is reinforced by their talk, in which the engineering team explained on stage well-known, basic software aspects of locomotion, motion control, state estimation and manipulation. This hints that they are indeed reinventing the wheel and re-implementing techniques, instead of leveraging existing ROS packages that are known to work for many of these tasks. Given the many software challenges still unresolved for humanoid robots, it's disappointing to see Tesla trying to re-implement everything by themselves. Were they to embrace ROS and its community, they could leverage hundreds of engineering-years and align with an active and vibrant community. Tesla could do a lot of good by joining the robotics community's trends and contributing, instead of reinventing the wheel in software and hardware.

The Robotic Processing Unit, a ROS-specialized processing unit for the robotics architect

One other project that Tesla engineers could consider is the Robotic Processing Unit, a robot-specific processing device that uses hardware acceleration and maps robotics computations efficiently to its CPUs, FPGAs and GPUs to obtain the best performance. In particular, it specializes in extending the Robot Operating System (ROS 2) with hardware acceleration capabilities.

Hardware acceleration has the potential to revolutionize robotics, enabling new applications by speeding up robot response times while remaining power-efficient. However, the diversity of acceleration options makes it difficult for roboticists to select the right computational resource for each task.

As a device built with robot-specific computations in mind, the Robotic Processing Unit (RPU) shows more versatility and clear advantages when compared with Tesla's Full Self-Driving Computer (FSD, HW 3.0), delivering 3.75x its AI performance, or 7.5x the AI performance of Tesla's "Bot Brain".
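
The two ratios are consistent with each other if one assumes that AI performance scales linearly with the number of Tesla SOCs (two in FSD HW 3.0, one in the "Bot Brain"). That linear scaling is an assumption made for this check, not something Tesla has confirmed. A short sketch of the arithmetic, in relative units where one Tesla SOC equals 1:

```python
from fractions import Fraction

# SOC counts as described in this article.
SOCS = {"FSD (HW 3.0)": 2, "Bot Brain": 1}

# RPU performance relative to a single Tesla SOC: the 7.5x figure quoted above.
RPU_VS_SINGLE_SOC = Fraction(15, 2)


def rpu_speedup(soc_count, rpu_units=RPU_VS_SINGLE_SOC):
    """RPU advantage over a device with `soc_count` SOCs.

    Assumes (hypothetically) that AI performance grows linearly with SOC count.
    """
    return rpu_units / soc_count


if __name__ == "__main__":
    for name, count in SOCS.items():
        print(f"RPU vs {name}: {float(rpu_speedup(count))}x")
```

Halving the SOC count doubles the relative advantage, which is why the 3.75x figure against FSD becomes 7.5x against the "Bot Brain".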

From real-time capabilities to peripherals to power consumption, the computer architecture of Tesla's robot brain underwhelms. Neither the software nor the hardware approach seems ready to provide the "intelligence to navigate the world by themselves". Not for their cars, and much less for their humanoids.