This article describes the architectural pillars and conventions required to introduce hardware acceleration in ROS 2 in a scalable and technology-agnostic manner. The content is inspired by the lastest draft of REP-2008 which is available at the REP-2008 Pull Request. In particular, this update includes improvements and the addition of a fourth pillar, cloud extensions, which allow bringing the hardware acceleration capabilities introduced in ROS 2 graphs also directly to the cloud.

Motivation

With the decline of Moore’s Law, hardware acceleration has proven itself as the answer for achieving higher performance gains in robotics [1]. By creating specialized compute architectures that rely on specific hardware (i.e. through FPGAs or GPUs), hardware acceleration empowers faster robots, with reduced computation times (real fast, as opposed to real-time), lower power consumption and more deterministic behaviours[2] [3]. The core idea is that instead of following the traditional control-driven approach for software development in robotics, a mixed control- and data-driven one allows to design custom compute architectures that further exploit parallelism. To do so, we need to integrate in the ROS 2 ecosystem external frameworks, tools and libraries that facilitate creating parallel compute architectures.

The purpose of REP-2008 is to provide standard guidelines for how to use hardware acceleration in combination with ROS 2. These guidelines are realized in the form of a series of ROS 2 packages that integrate these external resources and provide a ROS 2-centric open architecture for hardware acceleration.

The architecture proposed extends the ROS 2 build system (ament), the ROS 2 build tools (colcon) and add a new firmware pillar to simplify the production and deployment of acceleration kernels. The architecture is agnostic to the computing target (i.e. considers support for edge, workstation, data center or cloud targets), technology-agnostic (considers initial support for FPGAs and GPUs), application-agnostic and modular, which enhances portability to new hardware solutions and other silicon vendors. The core components of the architecture are disclosed under an Apache 2.0 license, available and maintained at the ROS 2 Hardware Acceleration Working Group GitHub organization.

Value for stakeholders:

- Package maintainers can use these guidelines to integrate hardware acceleration capabilities in their ROS 2 packages.

- Consumers can use the guidelines in the REP, as well as the corresponding category of each hardware solution, to set expectations on the hardware acceleration capabilities that could be obtained from each vendor's hardware solution.

- Silicon vendors and solution manufacturers can use these guidelines to connect their firmware and technologies to the ROS 2 ecosystem, obtaining direct support for hardware acceleration in all ROS 2 packages that support it.

The outcome of REP-2008 should be that maintainers who want to leverage hardware acceleration in their packages, can do so with consistent guidelines and with support across multiple technologies (FPGAs and GPUs initially, but extending towards more accelerators in the future) by following the conventions set. This way, maintainers will be able to create ROS 2 packages with support for hardware acceleration that can run across hardware acceleration technologies, including FPGAs and GPUs.

In turn, the documentation of categories and hardware acceleration capabilities will improve. The guidelines in here provide a ROS 2-centric open architecture for hardware acceleration, which silicon vendors can decide to adopt when engaging with the ROS 2 community.

Goals

REP-2008 aims to describe the architectural pillars and conventions required to introduce hardware acceleration in ROS 2 in a scalable and technology-agnostic manner. A set of categories meant to classify supported hardware acceleration solutions is provided. Inclusion in a category is based on the fulfillment of the hardware acceleration capabilities.

Antigoals

The motivation behind REP-2008 does not include:

- Dictating policy implementation specifics on maintainers to achieve hardware acceleration

- Policy requirements (which framework to use, specific C++ preprocessor instructions, etc.) are intentionally generic and to remain technology-agnostic (from a hardware acceleration perspective).

- Maintainers can come up with their own policies depending on the technology targeted (FPGAs, GPUs, etc.) and framework used (

HLS,ROCm,CUDA, etc).

- Enforcing specific hardware solutions on maintainers

- No maintainer is required to target any specific hardware solution by any of the guidelines in REP-2008.

- Instead, maintainers are free to choose among any of the supported technologies and hardware solutions.

Architecture pillars

ROS 2 stack Hardware Acceleration Architecture @ ROS 2 stack

+-----------+ +---------------------------+

| | | acceleration_examples |

|user land | +-----------------+------------------+--+-----------------+

| | | Drivers | Libraries | Firmware |Cloud |

+-----------+ +-----------------+-+-------------------------+-----------+

| | | ament_1| ament_2 | | | | |

| | +---------------------------------------+ fw_1|fw_2|cloud1|

| tooling | | ament_acceleration|colcon_acceleration| | | |

| | +---------------------------------------------+-----------+

| | | build system | meta build | firmware | cloud|

+-----------+ +--------+----------+-------+-----------+-+--------+--+---+

| rcl | | | | |

+-----------+ | | | |

| rmw | | | | |

+-----------+ + + + +

|rmw_adapter| Pillar I Pillar II Pillar III Pillar IV

+-----------+

Pillar I - Extensions to ament

The first pillar represents extensions of the ament ROS 2 build system. These CMake extensions help achieve the objective of simplifying the creation of acceleration kernels. By providing an experience and a syntax similar to other ROS 2 libraries targeting CPUs, maintainers will be able to integrate kernels into their packages easily. The ament_acceleration ROS 2 package abstracts the build system extensions from technology-specific frameworks and software platforms. This allows to easily support hardware acceleration across FPGAs and GPUs while using the same syntax, simplifying the work of maintainers. The code listing below provides an example that instructs the CMakeLists.txt file of a ROS 2 package to build a vadd kernel with the corresponding sources and configuration:

acceleration_kernel(

NAME vadd

FILE src/vadd.cpp

INCLUDE

include

)

Under the hood, each specialization of ament_acceleration should rely on the corresponding technology libraries to enable it. For example, ament_vitis relies on Vitis Unified Software Platform and on the Xilinx Runtime (XRT) library to generate acceleration kernels and facilitate OpenCL communication between the application code and the kernels. Vitis and XRT are completely hidden from the robotics engineer, simplifying the creation of kernels through simple CMake macros. The same kernel expressed with ament_vitis is presented below:

vitis_acceleration_kernel(

NAME vadd

FILE src/vadd.cpp

CONFIG src/kv260.cfg

INCLUDE

include

TYPE

hw

PACKAGE

)

While ament_acceleration CMake macros are preferred and will be encouraged, maintainers are free to choose among the CMake macros available. After all, it'll be hard to define a generic set of macros that fits all use cases across technologies.

Through ament_acceleration and technology-specific specializations (like ament_vitis), the ROS 2 build system is automatically enhanced to support producing acceleration kernel and related artifacts as part of the build process when invoking colcon build. To facilitate the work of maintainers, this additional functionality is configurable through mixins that can be added to the build step of a ROS 2 workspace, triggering all the hardware acceleration logic only when appropriate (e.g. when --mixin kv260 is appended to the build effort, it'll trigger the build of kernels targeting the KV260 hardware solution). For a reference implementation of these enhacements, refer to ament_vitis.

Pillar II - Extensions to colcon

The second pillar extends the colcon ROS 2 meta built tools to integrate hardware acceleration flows into the ROS 2 CLI tooling and allow to manage it. Examples of these extensions include emulation capabilities to speed-up the development process and/or facilitate it without access to the real hardware, or raw image production tools, which are convenient when packing together acceleration kernels for embedded targets. These extensions are implemented by the colcon_acceleration ROS 2 package. On a preliminary implementation, these extensions are provided the following colcon acceleration subverbs:

colcon acceleration subverbs:

board Report the board supported in the firmware

emulation Manage hardware emulation capabilities

hls Vitis HLS capabilities management extension

hypervisor Configure the Xen hypervisor

linux Configure the Linux kernel

list List supported firmware for acceleration

mkinitramfs Creates compressed cpio initramfs from image

mount Mount raw images

package Packages workspace with kernels for distribution

platform Report the platform enabled in the firmware

select Select an existing firmware and default to it.

umount Umount raw images

v++ Vitis v++ compiler wrapper

version Report version of the tool

Using the list and select subverbs, it's possible to inspect and configure the different hardware acceleration solutions. The rest of the subverbs allow to manage hardware acceleration artifacts and platforms in a simplified manner.

In turn, the list of subverbs will improve and grow to cover other technology solutions.

Pillar III - firmware

The third pillar is firmware, it is meant to provide firmware artifacts for each supported technology so that the kernels can be compiled against them, simplifying the process for consumers and maintainers, and further aligning with the ROS typical development flow.

Each ROS 2 workspace can have one or multiple firmware packages deployed. The selection of the active firmware in the workspace is performed by the colcon acceleration select subverb (pillarII_). To get a technology solution aligned with REP-2008's architecture, each vendor should provide and maintain an acceleration_firmware_<solution> package specialization that delivers the corresponding artifacts in line with its supported categories_ and capabilities_. Firmware artifacts should be deployed at <ros2_workspace_path>/acceleration/firmware/<solution> and be ready to be used by the ROS 2 build system extensions at (pillarI_) . For a reference implementation of specialized vendor firmware package, see acceleration_firmware_kv260.

By splitting vendors across packages, consumers and maintainers can easily switch between hardware acceleration solutions.

Pillar IV - cloud extensions

Leveraging the cloud provides roboticists with unlimited resources to further accelerate computations. Besides lots of CPU, cloud computing providers such as GCP, Azure or AWS offer instances that provide big FPGAs and GPUs for on-cloud hardware acceleration. This means once the ROS graph is partially in the cloud, architects can use computing there, including custom accelerators, to reduce and optimize robotic computations. But tapping into all that power while aligned with common ROS and robotics development flows is non-trivial.

This fourth pillar, cloud extensions, helps robotic architects bridge the gap and simplify the use of hardware acceleration in the cloud for ROS. It does so by extending the three prior pillars and adding cloud capabilities to them. Ultimately, the cloud extensions allow to easily build hardware acceleration kernels that target cloud instances while aligning to the unified APIs for cloud provisioning, set up, deployment and launch derived from the standard ROS 2 launch system.

In turn, a reference implementation leveraging hardware acceleration in the cloud with ROS 2 will be facilitated with one of the cloud service providers.

Specification

To drive the creation, maintenance and testing of acceleration kernels in ROS 2 packages that are agnostic to the computing target (i.e. consider support for edge, workstation, data center or cloud targets) and technology-agnostic (considers initial support for FPGAs and GPUs), REP-2008 builds on top of open standards. Particularly, OpenCL 1.2 ([4], [5]) is encouraged for a well established standardized interoperability between the host-side (CPU) and the acceleration kernel. Unless stated otherwise, the hardware acceleration terminology used in this document follows the OpenCL nomenclature for hardware acceleration.

A ROS 2 package supports hardware acceleration if it provides support for at least one of the supported hardware acceleration solutions that comply with REP-2008.

A hardware acceleration solution from a given vendor is supported if it at least has a Compatible category.

Kernel levels in ROS 2

To favour modularity, organize kernels and allow robotics architects to select only those accelerators needed to meet the requirements of their application, acceleration kernels in ROS 2 will be classified in 3 levels according to the ROS layer/underlayer they impact:

- Level 1 - ROS 2 applications and libraries: This group corresponds with acceleration kernels that speed-up OSI L7 applications or libraries on top of ROS 2. Any computation on top of ROS 2 is a good a candidate for this category. Examples include selected components in the navigation, manipulation, perception or control stacks.

- Level 2 - ROS 2 core packages: This includes kernels that accelerate or offload OSI L7 ROS 2 core components and tools to a dedicated acceleration solution (e.g. an FPGA). Namely, we consider

rclcpp,rcl,rmw, and the correspondingrmw_adaptersfor each supported communication middleware. Examples includes ROS 2 executors for more deterministic behaviours [6], or complete hardware offloaded ROS 2 Nodes [7].

- Level 3 - ROS 2 underlayers: Groups together all accelerators below the ROS 2 core layers belonging to OSI L2-L7, including the communication middleware (e.g. DDS). Examples of such accelerators include a complete or partial DDS implementation, an offloaded networking stack or a data link layer for real-time deterministic, low latency and high throughput interactions.

Hardware acceleration solutions complying with REP-2008 should aspire to support multiple kernel levels in ROS 2 to maximize consumer experience.

Benchmarking

Benchmarking is the act of running a computer program to assess its relative performance. In the context of hardware acceleration, it's fundamental to assess the relative performance of an acceleration kernel versus its CPU scalar computing baseline. Similarly, benchmarking helps comparing acceleration kernels across hardware acceleration technology solution (e.g. FPGA_A vs FPGA_B or FPGA_A vs GPU_A, etc.) and across kernel implementations (within the same hardware acceleration technology solution).

There're different types of benchmarking approaches. The following diagram depicts the most popular inspired by [8]:

Probe Probe

+ +

| |

+--------|------------|-------+ +---------------------------+

| | | | | |

| +--|------------|-+ | | |

| | v v | | | - latency <---------+ Probe

| | | | | - throughput<---------+ Probe

| | Function | | | - memory <---------+ Probe

| | | | | - CPU <---------+ Probe

| +-----------------+ | | |

| System under test | | System under test |

+-----------------------------+ +---------------------------+

Functional Non-functional

+-------------+ +-------------------------+

| Test App. | | +--------------------+ |

| + + + + | | | Application | |

+--|-|--|--|--+---------------+ | | <------+ Probe

| | | | | | | +--------------------+ |

| v v v v | | |

| Probes | | <------+ Probe

| | | |

| System under test | | System under test |

| | | <------+ Probe

| | | |

| | | |

+-----------------------------+ +-------------------------+

Black-Box Grey-box

In addition, the following aspects should be considered when benchmarking acceleration kernels in ROS 2:

embedded: Benchmarks should run in embedded easilyROS 2-native: Benchmarks should consider the particularities of ROS 2 and its computational graph. If necessary, they should instrument the communications middleware and its underlying layers.intra-process, inter-process and intra-network: Measures conducted should consider communication within a process in the same SoC, between processes in an SoC and between different SoCs connected in the same network (intra-network). Inter-network measures are beyond the scope of REP-2008.compute substrate-agnostic: benchmarks should be able to run on different hardware acceleration technology solutions. For that purpose, a CPU-centric framework (as opposed to an acceleration technology-specific framework) for benchmarking and/or tracing is the ideal choice.automated: benchmarks and related source code should be designed with automation in mind. Once ready, creating a benchmark and producing results should be (ideally) a fully automated process.hardware farm mindset: benchmarks will be conducted on hardware embedded platforms sitting in a farm-like environment (redundancy of tests, multiple SoCs/boards) with the intent of validating and comparing different technologies.

Accounting for all of this, in REP-2008, we adopt a grey-box and non-functional benchmarking approach for hardware acceleration that allows to evaluate the relative performance of accelerated ROS 2 individual nodes and complete computational graphs. To realize it in a technology agnostic-manner, we select the Linux Tracing Toolkit next generation (LTTng) which will be used for tracing and benchmarking.

Differences between tracing and benchmarking

Tracing and benchmarking can be defined as follows:

tracing: a technique used to understand what goes on in a running software system.benchmarking: a method of comparing the performance of various systems by running a common test.

From these definitions, inherently one can determine that both benchmarking and tracing are connected in the sense that the test/benchmark will use a series of measurements for comparison. These measurements will come from tracing probes. In other words, tracing will collect data that will then be fed into a benchmark program for comparison.

Methodology for ROS 2 Hardware Acceleration

rebuild

+---------------+

| |

| |

|4. benchmark +--+

| acceleration| |

+--> | |

| +---------------+ |

| acceleration|

| tracing|

trace dataflow | |

+--------------+ | +---------------+ |

| | +---+ +<+

+------------v---+ +--------v-------+ | |

| | | | | |

| 3.2 accelerate | | 3.1 accelerate <---------> 3. hardware |

| graph | | nodes | trace | acceleration |

| | | | nodes | <-+

+----------------+ +----------------+ | | |

+---------------+ |

CPU |

tracing|

|

+--------------+ |

+----------------+ rebuild | | |

| +----------> | |

start +----------> 1. trace graph | | 2. benchmark +--+

| | | CPU |

+----+------^--^-+ | |

| | | +-------+------+

| | | |

+------+ | |

LTTng +--------------------+

re-instrument

The following proposes a methodology to analyze a ROS 2 application and design appropriate acceleration:

- instrument both the core components of ROS 2 and the target kernels using LTTng. Refer to ros2_tracing for tools, documentation and ROS 2 core layers tracepoints;

- trace and benchmark the kernels on the CPU to establish a compute baseline;

- develop a hardware accelerated implementation on alternate hardware (e.g., GPU, FPGA, etc):

- 3.1 accelerate computations at the Node or Component level for each one of those identified in 2. as good candidates.

- 3.2 accelerate inter-Node exchanges and reduce the overhead of the ROS 2 message-passing system across all its abstraction layers.

- trace, benchmark against the CPU baseline, and improve the accelerated implementation.

The proposed ROS 2 methodology for hardware acceleration is demonstrated in [9] and [10].

Acceleration examples

For the sake of illustrating maintainers and consumers how to build their own acceleration kernels and guarantee interoperability across technologies, a ROS 2 meta-package named acceleration_examples will be maintained and made available. This meta-package will contain various packages with simple common acceleration examples. Each one of these examples should support all hardware acceleration solutions complying with REP-2008.

In turn, a CI system will be set to build periodically and for every commit the meta-package.

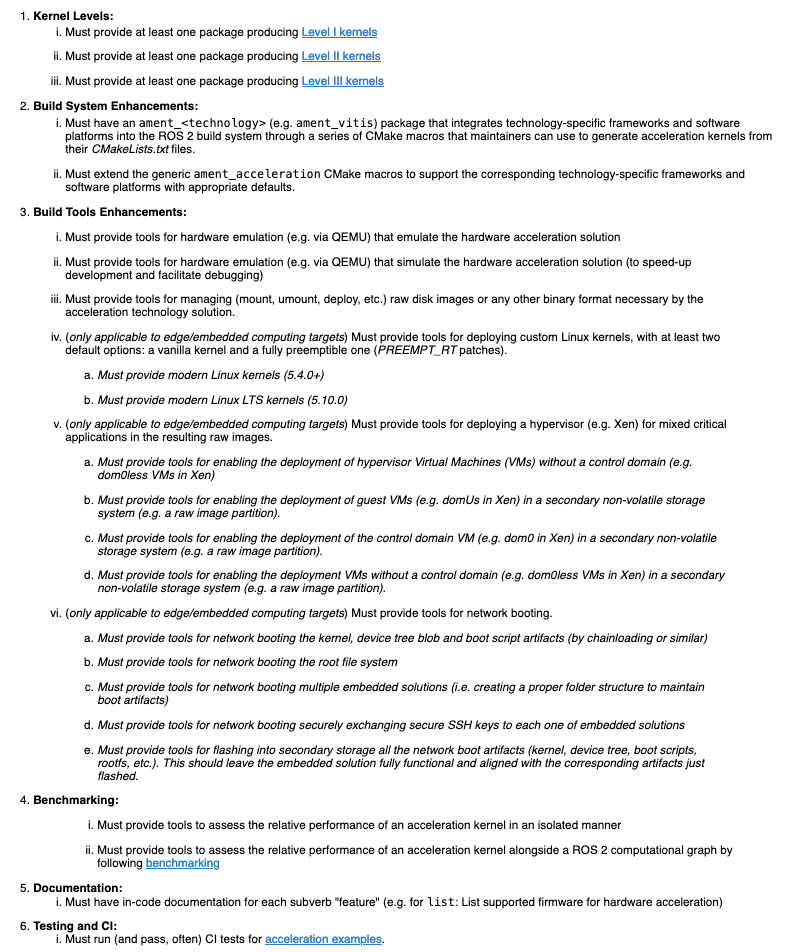

Capabilities

The following list describes the hardware acceleration capabilities that hardware acceleration vendors must consider when connecting their firmware and technology solutions to the ROS 2 ecosystem.

Categories

There are 4 hardware acceleration categories below which will classify hardware acceleration solutions and technologies, each roughly summarized as:

- Category Official:

- Highest level of support, backed by a vendor

- Hardware acceleration solution compliant with REP-2008 and fully integrated in the ROS 2 ecosystem.

- Developer tools available to facilitate the development process.

- All

acceleration examples_ should run on the hardware acceleration solution. - Acceleration kernels available for multiple

Kernel Levels, with at leastLevel I kernels.

- Category Community:

- Community-level support

- Hardware acceleration solution compliant with only a subset of REP-2008.

- A subset of the developer tools available.

- Acceleration kernels available for at least

Level I kernels_.

- Category Compatible:

- Interoperability demonstrated.

- Hardware acceleration solution compliant with a lesser subset of REP-2008.

- Some developer tools available.

- Acceleration kernels available for at least

Level I kernels_.

- Category Non-compatible:

- Default category

While each category will have different capabilities, it's always possible to overachieve in certain capabilities, even if other capabilities prevent a package from moving up to the next category level.

Category Comparison Chart

The chart below compares Quality Levels 1 through 5 relative to the 'Level 1' requirements' numbering scheme above.

- ✓ = required

- ● = recommended

- ○ = optional

| Official | Community | Compatible | Non-compatible | |

|---|---|---|---|---|

| Kernel Levels | ||||

| 1.i | ✓ | ✓ | ✓ | |

| 1.ii | ● | ● | ○ | |

| 1.iii | ● | ○ | ○ | |

| Build System | ||||

| 2.i | ✓ | ✓ | ✓ | |

| 2.ii | ● | ● | ○ | |

| Build Tools | ||||

| 3.i | ● | ● | ○ | |

| 3.ii | ● | ● | ○ | |

| 3.iii | ✓ | ✓ | ● | |

| 3.iv | ✓ | ✓ | ○ | |

| 3.iv.a | ✓ | ● | ○ | |

| 3.iv.b | ● | ● | ○ | |

| 3.v | ✓ | ● | ○ | |

| 3.v.a | ✓ | ● | ○ | |

| 3.v.b | ✓ | ● | ○ | |

| 3.v.c | ✓ | ● | ○ | |

| 3.v.d | ✓ | ● | ○ | |

| 3.vi | ● | ● | ○ | |

| 3.vi.a | ● | ● | ○ | |

| 3.vi.b | ● | ● | ○ | |

| 3.vi.c | ● | ● | ○ | |

| 3.vi.d | ● | ● | ○ | |

| 3.vi.e | ● | ● | ○ | |

| Benchmarking | ||||

| 4.i | ● | ● | ○ | |

| 4.ii | ● | ● | ○ | |

| Documentation | ||||

| 5.i | ✓ | ✓ | ● | |

| Testing and CI | ||||

| 6.i | ✓ | ● | ○ |

Reference Implementation and recommendations

Reference implementations complying with REP-2008 and extending the ROS 2 build system and tools for hardware acceleration are available at the Hardware Accelerationg Working Group GitHub organization. This includes acceleration_examples also the abstraction layer ament_acceleration and firmware from vendor specializalizations like ament_vitis.

For additional implementations and recommendations, check out the Hardware Accelerationg Working Group GitHub organization.

Z. Wan, B. Yu, T. Y. Li, J. Tang, Y. Zhu, Y. Wang, A. Raychowdhury, and S. Liu, “A survey of fpga-based robotic computing,”

IEEE Circuits and Systems Magazine, vol. 21, no. 2, pp. 48–74, 2021. ↩︎Mayoral-Vilches, V., & Corradi, G. (2021). Adaptive computing in robotics, leveraging ros 2 to enable software-defined hardware for fpgas.

https://www.xilinx.com/support/documentation/white_papers/wp537-adaptive-computing-robotics.pdf ↩︎Mayoral-Vilches, V. (2021). Kria Robotics Stack.

https://www.xilinx.com/content/dam/xilinx/support/documentation/white_papers/wp540-kria-robotics-stack.pdf ↩︎OpenCL 1.2 API and C Language Specification (November 14, 2012).

https://www.khronos.org/registry/OpenCL/specs/opencl-1.2.pdf ↩︎OpenCL 1.2 Reference Pages.

https://www.khronos.org/registry/OpenCL/sdk/1.2/docs/man/xhtml/ ↩︎Y. Yang and T. Azumi, “Exploring real-time executor on ros 2,”.

2020 IEEE International Conference on Embedded Software and Systems (ICESS). IEEE, 2020, pp. 1–8. ↩︎C. Lienen and M. Platzner, “Design of distributed reconfigurable robotics systems with reconros,” 2021.

https://arxiv.org/pdf/2107.07208.pdf ↩︎A. Pemmaiah, D. Pangercic, D. Aggarwal, K. Neumann, K. Marcey, "Performance Testing in ROS 2".

https://drive.google.com/file/d/15nX80RK6aS8abZvQAOnMNUEgh7px9V5S/view ↩︎"Methodology for ROS 2 Hardware Acceleration". ros-acceleration/community #20. ROS 2 Hardware Acceleration Working Group.

https://github.com/ros-acceleration/community/issues/20 ↩︎Acceleration Robotics, "Hardware accelerated ROS 2 pipelines and towards the Robotic Processing Unit (RPU)".

https://news.accelerationrobotics.com/hardware-accelerated-ros2-pipelines/ ↩︎