This article discusses breakthroughs in robotics perception with the Qualcomm RB5 and ROS 2 Hardware Acceleration, achieved by leveraging OpenCL to offload computation to the GPU. Benchmarking results hint at speedups of up to 4x for ROS 2 perception nodes.

In a previous article [1], we explored mapping the computation graphs of ROS 2 perception pipelines to FPGA-based hardware accelerators, such as AMD FPGA SoC solutions, with kernels running at 250 MHz. This initial work has since evolved [2] into a complete community, with open contributions and open standards that enable vendor-agnostic hardware acceleration solutions for ROS 2. Products have also been created as part of the endeavour, like Acceleration Robotics’ ROBOTCORE Framework, which allows building robot IP core accelerators (or robot cores) in a vendor-agnostic manner while focusing on a ROS 2-centric development experience.

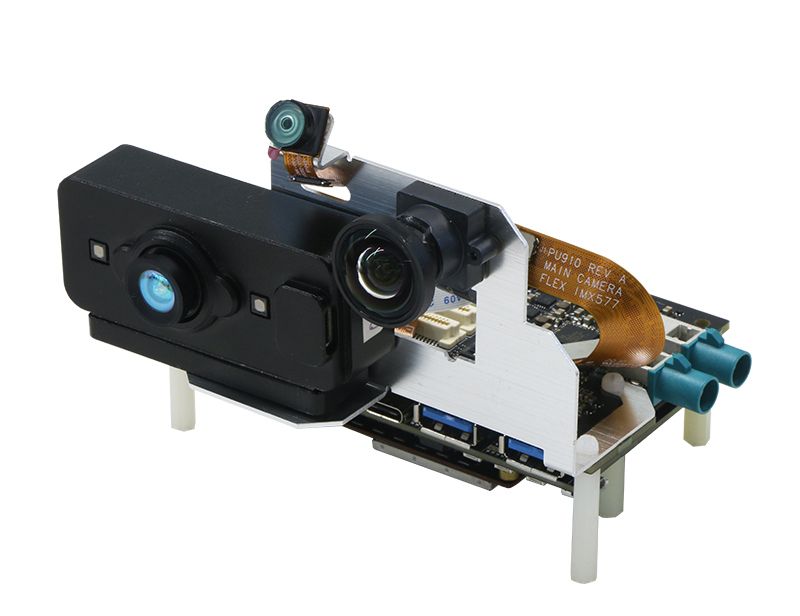

In this report, we further explore porting the open ROS 2 Hardware Acceleration Stack to GPU hardware accelerators from Qualcomm's premium-tier QRB5165 processor, designed to help build consumer, enterprise or industrial robots with on-device AI and 5G connectivity. In particular, we leverage the widely adopted Qualcomm® Robotics RB5 Development Kit.

The Qualcomm RB5 Robotics Kit is an innovative platform geared towards high-compute, low-power robotics and drone applications. It is equipped with the Qualcomm Kryo CPU, Adreno 650 GPU and the Hexagon DSP, enabling developers to combine high-performance heterogeneous compute to develop power-efficient as well as cost-effective robots.

We leverage the QRB5165’s Adreno 650 GPU to parallelize a subset of ROS 2’s image-processing pipeline, achieving speed-ups of up to 400%.

Accelerating ROS 2 Perception Pipelines:

Camera output often needs preprocessing to meet the input requirements of multiple different perception functions. These can include operations such as resizing and correcting for lens distortion. Image rectification and resizing are fundamental operations in computer vision pipelines, essential for tasks like stereo vision, object detection, and depth estimation. Traditionally, these operations have been performed using software-based algorithms, which can be computationally intensive and time-consuming, particularly for high-resolution images or video streams.
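To make the resizing operation concrete, here is a minimal CPU-only sketch in pure Python. It uses nearest-neighbour sampling as a simplification (an assumption chosen for brevity, not necessarily the interpolation used in the benchmarked nodes), and the function name `resize_nearest` is hypothetical. The point it illustrates is that every output pixel is computed independently of the others, which is what makes the operation a good fit for a GPU.

```python
def resize_nearest(src, dst_w, dst_h):
    """Resize a row-major 2D image (list of rows) with nearest-neighbour sampling."""
    src_h, src_w = len(src), len(src[0])
    dst = [[0] * dst_w for _ in range(dst_h)]
    for y in range(dst_h):
        # Map the output row back to the closest source row.
        sy = min(src_h - 1, y * src_h // dst_h)
        for x in range(dst_w):
            # Each (x, y) pair is independent: on a GPU it would be one work-item.
            sx = min(src_w - 1, x * src_w // dst_w)
            dst[y][x] = src[sy][sx]
    return dst


image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
half = resize_nearest(image, 2, 2)
print(half)
```

Because the two loops carry no dependency between iterations, they can be replaced by a 2D index space in which each work-item handles a single pixel.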

By offloading these tasks to hardware-accelerated OpenCL kernels running on the Adreno 650 GPU, we were able to achieve significant performance gains with speed-ups of up to 400%.

Another benefit of offloading computation to the GPU is the ability to group image processing operations by passing data directly from one kernel to another. This reduces both the message-transfer overhead incurred when receiving input data and the data-transfer overhead between the CPU and the GPU. The latter is tackled with zero-copy data transfers, which let us map device (accelerator) memory into the host (CPU) address space.
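The difference between copying a buffer and sharing it can be illustrated with a CPU-only analogy in Python. This is not OpenCL code: `bytes(...)` stands in for an explicit host-to-device copy and `memoryview` stands in for a mapped, shared buffer. The two in-place stages (`invert_in_place`, `threshold_in_place`) are hypothetical placeholders for chained kernels, not the rectification or resizing kernels themselves.

```python
frame = bytearray(range(8))   # stand-in for an image buffer owned by the host

copied = bytes(frame)         # explicit copy: a second buffer is allocated
mapped = memoryview(frame)    # zero-copy: a view onto the same memory

mapped[0] = 255               # a write through the view...
assert frame[0] == 255        # ...is visible in the original buffer
assert copied[0] == 0         # ...while the earlier copy is unaffected


def invert_in_place(view):
    """Hypothetical first stage operating directly on the shared buffer."""
    for i in range(len(view)):
        view[i] = 255 - view[i]


def threshold_in_place(view, cutoff=128):
    """Hypothetical second stage consuming the first stage's output in place."""
    for i in range(len(view)):
        view[i] = 255 if view[i] >= cutoff else 0


# Chaining stages on the same view never materialises an intermediate copy.
invert_in_place(mapped)
threshold_in_place(mapped)
print(list(frame))
```

The same idea applies to grouped GPU kernels: when the second stage reads the buffer the first stage wrote, no intermediate result has to travel back to the host in between.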

Our OpenCL implementation has two components:

- The kernel running on the GPU,

- The host code running on the CPU, which controls the execution of the kernel through the ROS 2 pipeline.
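The split between the two components can be sketched in plain Python, with the GPU emulated serially on the CPU. All names here are hypothetical: `rectify_kernel` plays the role of the device kernel (one invocation per output pixel, using precomputed lookup maps), and `enqueue_nd_range` plays the role of the host launching the kernel over a 2D index space, loosely named after OpenCL's `clEnqueueNDRangeKernel`. The lookup maps are toy values, not a real camera calibration.

```python
def rectify_kernel(gid_x, gid_y, src, map_x, map_y, dst):
    """'Kernel': computes exactly one output pixel, like a single GPU work-item."""
    dst[gid_y][gid_x] = src[map_y[gid_y][gid_x]][map_x[gid_y][gid_x]]


def enqueue_nd_range(kernel, global_size, *args):
    """'Host': launches the kernel once per point of a 2D index space.

    A real GPU would run these invocations in parallel; here they run serially.
    """
    width, height = global_size
    for gid_y in range(height):
        for gid_x in range(width):
            kernel(gid_x, gid_y, *args)


src = [[10, 20], [30, 40]]
# Toy lookup maps that mirror the image horizontally (assumed for illustration).
map_x = [[1, 0], [1, 0]]
map_y = [[0, 0], [1, 1]]
dst = [[0, 0], [0, 0]]

enqueue_nd_range(rectify_kernel, (2, 2), src, map_x, map_y, dst)
print(dst)
```

In a ROS 2 node, the host side would typically sit inside the image subscription callback, launching the kernel for each incoming frame and publishing the result.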

To accelerate image processing on the GPU, the images must be transferred to GPU memory. The traditional way of doing this is to copy the images to VRAM, where the GPU can access them, perform the parallelized operations on the GPU, and then copy the result back from the GPU to the CPU. However, this adds the overhead of transferring data between the GPU and the CPU over the PCIe bridge.

The OpenCL specification includes memory allocation flags and API functions that developers can use to eliminate extra copies during execution. This is referred to as zero-copy behaviour. We were able to leverage zero-copy behaviour using Qualcomm-specific memory allocation flags in OpenCL. Furthermore, we hid the data transfer behind kernel execution through the use of multiple queues (PCIe lanes give us duplex memory transfer capabilities). This ensures that image processing happens asynchronously and that data-transfer overheads are hidden behind kernel runtime.
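The benefit of hiding transfers behind kernel execution can be estimated with a simple, idealised timing model. The sketch below treats upload, kernel execution and download as three pipeline stages that can each work on a different frame at the same time. The function names and the stage durations are hypothetical values chosen for illustration, not measurements from the RB5.

```python
def serial_latency(n_frames, t_upload, t_kernel, t_download):
    """Each frame waits for upload, compute and download in turn."""
    return n_frames * (t_upload + t_kernel + t_download)


def overlapped_latency(n_frames, t_upload, t_kernel, t_download):
    """Idealised three-stage pipeline.

    The first frame pays for all three stages; after that, one frame
    completes every 'bottleneck' interval (the slowest stage).
    """
    fill = t_upload + t_kernel + t_download
    bottleneck = max(t_upload, t_kernel, t_download)
    return fill + (n_frames - 1) * bottleneck


# Hypothetical stage times in milliseconds, for illustration only.
frames, up, run, down = 10, 2, 5, 2
print(serial_latency(frames, up, run, down))
print(overlapped_latency(frames, up, run, down))
```

Under this model, transfer time stops contributing to steady-state throughput as long as the kernel remains the slowest stage, which is the effect described above.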

We were thus also able to significantly improve the performance of the perception pipeline (by more than 300%) by grouping image processing operations in a single node that transfers data between GPU kernels. This benchmark is listed in Table 1 as a1*: perception_2nodes_combined. The other benchmarks listed below, defined in the RobotPerf framework [4], are a1: perception_2nodes, a2: image rectification and a5: image resizing. The metric measured for this report is latency. Figure 1 provides a visualisation of the results.

Table 1: ROS 2 perception pipeline runtime

| Hardware | Node | Mean (speedup) | RMS (speedup) |
|---|---|---|---|
| RB5 - Kryo 585 CPU | a1 | 132.79 ms | 132.95 ms |
| RB5 - Kryo 585 CPU | a2 | 20.5416 ms | 21.2928 ms |
| RB5 - Kryo 585 CPU | a5 | 39.9251 ms | 41.4270 ms |
| RB5 - Adreno 650 GPU | a1 | 66.5104 ms (Δ 2.0) | 71.7921 ms (Δ 1.85) |
| RB5 - Adreno 650 GPU | a1* | 38.9777 ms (Δ 3.4) | 41.0983 ms (Δ 3.23) |
| RB5 - Adreno 650 GPU | a2 | 17.9097 ms (Δ 1.2) | 19.8730 ms (Δ 1.1) |
| RB5 - Adreno 650 GPU | a5 | 28.7540 ms (Δ 1.4) | 31.2265 ms (Δ 1.32) |
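
The Δ values in Table 1 read as the ratio of CPU latency to GPU latency for the same benchmark. The short sketch below recomputes them from the mean latencies in the table. It assumes that a1* (which has no CPU run of its own) is compared against the CPU a1 result, which is consistent with the reported Δ 3.4. The recomputed ratios may differ slightly from the table's Δ values, which appear to be rounded.

```python
# Mean latencies in milliseconds, taken from Table 1.
cpu_mean_ms = {"a1": 132.79, "a2": 20.5416, "a5": 39.9251}
gpu_mean_ms = {"a1": 66.5104, "a1*": 38.9777, "a2": 17.9097, "a5": 28.7540}


def speedup(benchmark):
    """Return CPU latency divided by GPU latency for one benchmark."""
    # Assumption: a1* is measured against the CPU a1 baseline.
    baseline = cpu_mean_ms[benchmark.rstrip("*")]
    return baseline / gpu_mean_ms[benchmark]


speedups = {name: round(speedup(name), 2) for name in gpu_mean_ms}
for name, value in speedups.items():
    print(f"{name}: {value}x")
```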